Safe Robot Learning

Learning can be used to improve the performance of a robotic system in a complex environment. However, providing safety guarantees during the...

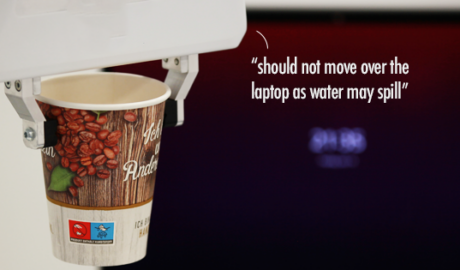

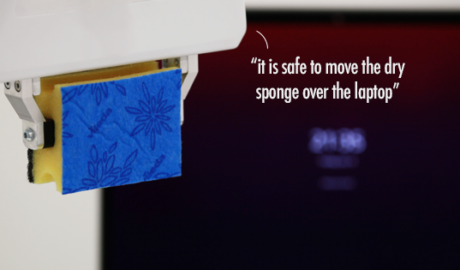

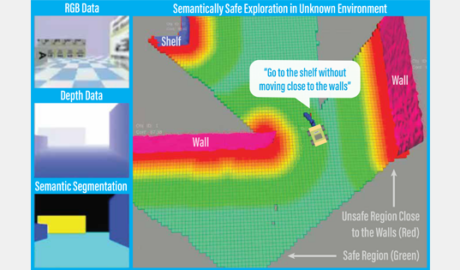

Semantic Control for Robotics

For robots to safely interact with people and the real world, they need the capability to not only perceive but also understand their surroundings in...

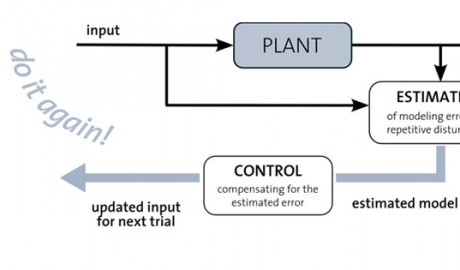

Foundations of Robot Learning, Control, and Planning

Learning algorithms hold great promise for improving a robot's performance whenever a priori models are not sufficiently accurate. We have developed...

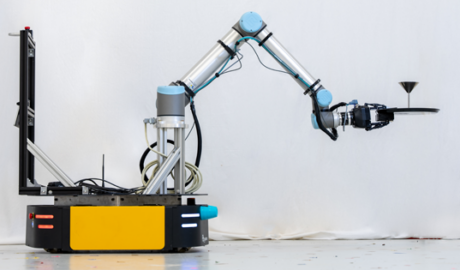

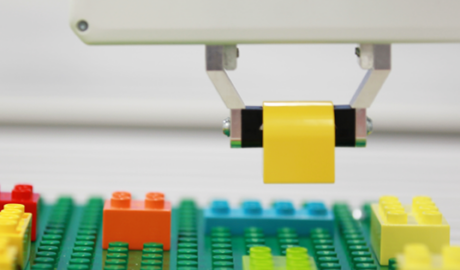

Mobile Manipulation

We enable robots to move around the environment while manipulating and interacting with objects. Our work includes nonprehensile manipulation, which...

Deep Neural Networks and Foundation Models for Robotics

We aim to develop a platform-independent approach that utilizes deep neural networks (DNNs) to enhance classical controllers to achieve...

Self-Driving Vehicles

As part of the SAE Autodrive Challenge, students in our lab will be working on designing, developing, and testing a self-driving car over the next...

Multiagent Coordination and Learning

Some tasks cannot be done by a single robot alone. A group of robots collaborating on a task has the potential to be highly efficient,...

Aerial Robotics Applications

This series of projects aims to address the critical challenges of deploying aerial robotics in various applications or industries. Mining: This...

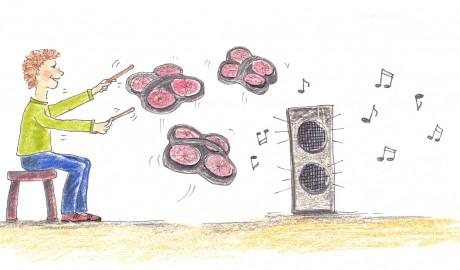

Hardware and Software Co-Design

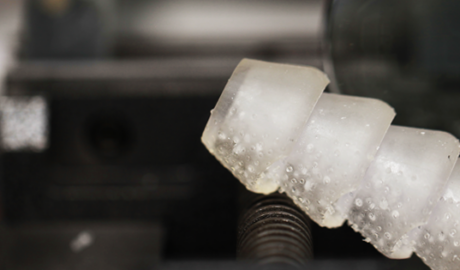

Hardware and software co-design is one of our future research directions. Form and function are co-dependent in robotics as they are in nature. For...

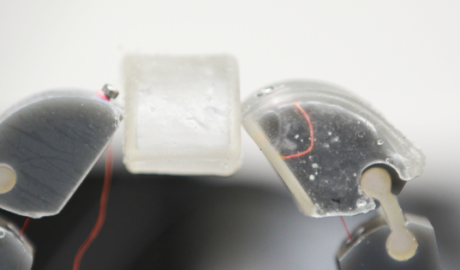

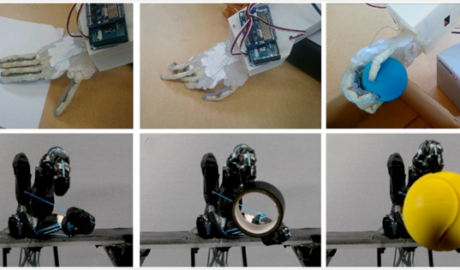

Learning with Contacts

Learning through contact is one of our future directions. As robots venture from traditional static environments to versatile ones, they need to...