We are happy to announce that the Safe Robot Learning Competition has been completed. We had 36 registered teams, and 9 teams participated in the final phase of the competition. Congratulations to our winners! We hosted a virtual award ceremony with invited talks from the winners on Wednesday, October 26 @ 10 am Kyoto time (October 25 @ 9 pm EDT; October 26 @ 3 am CEST). If you missed our award session, you can watch it using the link below.

Introduction

Advances in robotics promise improved functionality, efficiency and quality with an impact on many aspects of our daily lives. Example applications include autonomous driving, drone delivery, and service robots. However, the decision-making of such systems often faces multiple sources of uncertainty (e.g., incomplete sensory information, uncertainties in the environment, interaction with other agents, etc.). Deploying an embodied system in real-world and possibly commercial applications requires both (i) safety guarantees that the system acts reliably in the presence of the various sources of uncertainties and (ii) efficient deployment of the decision-making algorithm to the physical world (for performance and cost-effectiveness). As highlighted in the “Roadmap for US Robotics”, learning and adaptation are essential for next-generation robotics applications, and guaranteeing safety is an integral part of this.

In our recent review paper, we have observed that there is a growing interest in developing safe robot learning approaches from both the control and reinforcement learning communities. While the safe learning control problem is equally recognized as an important problem in both communities, there is a lack of comparison or systematic head-to-head evaluation of the approaches developed by the two communities. This is often due to the different sets of assumptions made in the process of developing the decision-making algorithm (also known as “controller”). In 2021, our team organized two workshops on safe robot learning at the IROS conference and the NeurIPS conference, respectively, to foster interdisciplinary discussions on the pressing challenges related to the design and deployment of robot learning algorithms to real-world applications.

With this competition, our goal is to bring together researchers from different communities to (1) solicit novel and data-efficient robot learning algorithms, (2) establish a common forum to compare control and reinforcement learning approaches for safe robot decision making, and (3) identify the shortcomings or bottlenecks of the state-of-the-art algorithms with respect to real-world deployment.

Relevance to IROS Community

Our goal closely aligns with the theme of this year’s IROS conference–“Embodied AI for a Symbiotic Society’’. Learning will be an essential component in every aspect of the robot software stack and safety is paramount in real-world applications. Through this competition, we wish to uncover what are the essential requirements to facilitate the transfer of robot learning algorithms from simulation to the physical world and set the ground to identify future research directions that combine the expertise from the control and learning communities.

We also hope that easy-to-use and cheaply reproducible evaluation environments (e.g., through physics-based simulation) will increase accessibility to research in the area of safe robot learning and speed up its progress through quantitative apples-to-apples comparisons.

Agile and safe flight has many potential applications, including search and rescue—where agility is required to cover as much area as possible under the constraint of limited battery life while safe flight is needed to avoid catastrophic crashes or harm to humans. This competition will give teams the opportunity to showcase how these two capacities could be combined.

One of the envisioned outcomes for the competition is a collaborative paper where the top 3 teams (in each track) will describe their methodologies and the competition results (including simulation and experiment phases) will be used to illustrate the features of the different approaches and compare them.

Competition Style

The competition includes two simulation (virtual) phases and an experimental (remote) phase. The fully virtual simulation components of the competition will be based on an open-source software benchmark suite we are currently developing: safe-control-gym. In the final experimental phase of the competition (to facilitate participation amid COVID) we will provide remote access to real robotic hardware via high-speed internet connections to our Flight Arena at the University of Toronto Institute for Aerospace Studies in Toronto, Canada.

Task

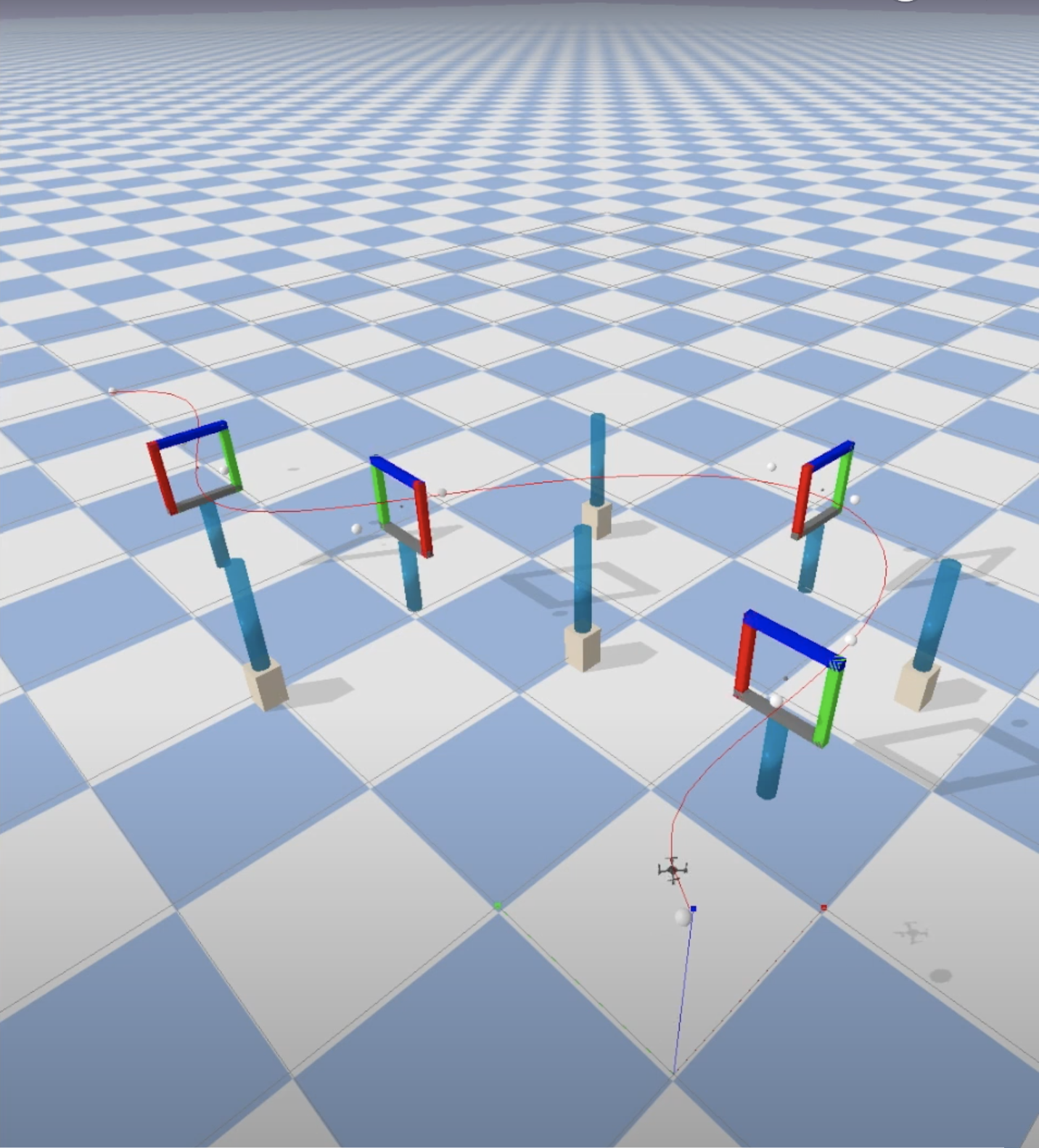

The task we consider is based on a nano quadrotor platform (Bitcraze’s Crazyflie). The quadrotor is required to navigate, as fast as possible, through an environment with a set of obstacles. The participating teams are required to show that their algorithms can safely navigate the quadrotor through increasingly cluttered environments and learn to cope with the following types of uncertainties: (1) variations/uncertainties in the obstacle positions and shapes, (2) vehicle modifications such as an added mass or payload, and (3) unforeseen aerodynamic disturbances (e.g., wind or downwash from other moving quadrotors). Safe/robust learning and adaptation approaches will be required to capture the unknown or unstructured uncertainties present in the setup. The task is considered to be unsuccessful if there is any collision with the obstacles and is unsafe if a set of safety constraints are violated (e.g., off-track maneuvers, exceeding a minimum safety distance to obstacles, exceeding a maximum completion time). The evaluation criteria will be based on the performance as measured by (1) completion time, (2) safety constraint satisfaction, and (3) the maximal clutteredness of the environment that can be accounted for by the algorithm.

Competition Phases

Preliminary phase (simulation): Registered participants are given access to the virtual platform to get familiar with the software setup. This initial test environment will be simulated, and a sufficiently accurate model of the robot and the cluttered environment will be provided for the design of navigation algorithms. The teams need to demonstrate basic navigation capabilities.

Selection phase (simulation): Registered participants will be given a more challenging environment subject to uncertainties (e.g., variations/uncertainties in the obstacle positions and shapes, vehicle modifications such as an added mass or payload, and unforeseen aerodynamic disturbances) to resemble real-world challenges. The teams are expected to demonstrate the capabilities of the robot learning algorithms to cope with both static and dynamic uncertainties in increasingly challenging simulated environments (e.g., with narrower spacing and irregular geometries). The evaluation will be based on a fixed number of trials corresponding to randomly generated obstacle configurations.

Final phase (experimental): The selected teams will test the proposed algorithms in a real experimental setup (via remote access to the University of Toronto’s Flight Arena). Our team—in Toronto and (if restrictions allow) in Kyoto—will facilitate remote access to the robot platform in Canada for both online and onsite IROS participants. Similar to the initial selection phase, the finalist teams will need to demonstrate the ability of the algorithm to cope with static uncertainties and dynamic uncertainties. On the competition days, we will have two scenarios: (A) environments with obstacles and disturbances resembling those provided in the simulated environment in the selection phase and (B) environments with novel obstacles and disturbances. Each scenario will consist of a fixed number of trials corresponding to obstacle configurations with increasing difficulties.

Note that, for an algorithm that involves continuous interaction with the environment (e.g., reinforcement learning or adaptive control approaches), safety constraint violations will be checked during both the learning stage and the test stage.

Scoring System

Unlike past quadrotor slalom competitions, our proposal does not focus on the vision aspect of the problem but rather on the quantifiable safety and generalizability of learning-based control laws. To do so, we will score approaches not only on pure flight performance (shortest time, shortest distance) but also on safety (e.g., collision or near-collision under perturbed state estimation, inertial properties, obstacle positions, etc.). The overall score of the flight will be computed based on three criteria:

- Performance: The amount of time that is required for the quadrotor to navigate through the environment.

- Safety constraint violation: The number of violations of spatial constraints (i.e., off-track maneuvers, exceeding a minimum safety distance to obstacles) and temporal constraint (i.e., exceeding a maximum completion time).

- Clutteredness of the environment: This criterion characterizes the difficulty of the task. This would be measured by the percentage of volume being occupied by the static and dynamic obstacles.

The quadrotors are encouraged to fly as fast as possible and at the same time not violate a set of predefined constraints (e.g., virtual tracks, closest distance with static and dynamic obstacles, and time constraints). Any violations will result in a penalty in the score. The clutteredness of the environment will be a weighting factor applied to the performance reward and the safety constraint violation penalty.

Submission system style: We will use GitHub’s Pull Request system to submit the source code of the learning-based solution/control approach. These controllers will be automatically executed and scored against the simulation environments as well as—for the finalist teams only—transferred to our Toronto-based team to be asynchronously run on the experimental quadrotor hardware.

If you have any questions related to the competition, please contact Jacopo Panerati at jacopo.panerati@utoronto.ca.

Competition Schedule

July 31: Registration form link (now open), alpha codebase link

August 14: Release of Development Scenarios

August 18: Release of sim2real Expansion

August 29: Initial Release of Competition Tasks in Simulation

October 16: Deadline for Code Submission Tested in Simulation (submission instructions) & Selection of Finalists

October 16 – October 22: Hardware Evaluation with Finalist Teams (remotely on the testbed in Toronto)

October 23: Final Code Submission Deadline

October 26: Award ceremony

November 1 – December 25: Dissemination

Winning Teams

Congratulations to the winning teams!

Team H^2

Niu Xinyuan, Hashir Zahir, and Huiyu Leong from Singapore

Team Ekuflie

Michel Hidalgo, Gerardo Puga, Tomas Lorente, Nahuel Espinosa, and John Alejandro Duarte Carrasco from Ekumen

Team ustc-arg

Kaizheng Zhang, Jian Di, Tao Jin, Xiaohan Li, Yijia Zhou, Xiuhua Liang, and Chenxu Zhang from the University of Science and Technology of China

Prizes

Kindly supported by Bitcraze:

1st Place: Bitcraze Crazyflie AI Bundle

2nd Place: Bitcraze Crazyflie STEM Ranging Bundle

3rd Place: Bitcraze Crazyflie STEM Bundle

Award Ceremony Program

Wednesday October 26 10:00 — 13:00 (Kyoto) | Tuesday October 25 21:00 — 24:00 (Toronto) | Wednesday October 26 3:00 — 6:00 (Berlin)

Schedule (Kyoto Time):

10:00 — 10:15: Opening Remarks (Angela Schoellig, TUM and University of Toronto)

10:15 — 10:35: Safe Aerial Robotics and Past Competitions (Antonio Loquercio, UC Berkeley)

10:35 — 10:45: safe-control-gym (Adam W. Hall, University of Toronto)

10:45 — 10:55: sim2real with Crazyflies (Spencer Teetaert, University of Toronto)

10:55 — 11:00: Competition Rationale (Jacopo Panerati, University of Toronto)

11:00 — 11:15: Prizes Announcement and Results (Kimberly McGuire, Bitcraze)

11:15 — 11:25: Break

11:25 — 12:25: Invited Talks from the Top 3 Teams (H^2, Ekuflie, and ustc-arg)

12:25 — 12:55: Moderated Discussion

12:55 — 13:00: Closing Remarks

Organizing Committee

Angela Schoellig, TUM and University of Toronto

Davide Scaramuzza, University of Zurich

Nicholas Roy, Massachusetts Institute of Technology

Vijay Kumar, University of Pennsylvania

Todd Murphey, Northwestern University

Sebastian Trimpe, RWTH Aachen University

Mark Mueller, University of California Berkeley

Jose Martinez-Carranza, Instituto Nacional de Astrofisica Optica y Electronica

SiQi Zhou, University of Toronto and Vector Institute

Melissa Greeff, University of Toronto and Vector Institute

Jacopo Panerati, University of Toronto and Vector Institute

Yunlong Song, University of Zurich

Leticia Oyuki Rojas Pérez, Instituto Nacional de Astrofisica Optica y Electronica

Antonio Loquercio, University of California at Berkeley

Wolfgang Hönig, Technical University of Berlin

Spencer Teetaert, University of Toronto